INF385T.3/CS395T: Human Computation and Crowdsourcing (Spring 2022)

THIS COURSE IS CROSS-LISTED; IF ONE SECTION IS FULL, PLEASE ENROLL IN THE OTHER. All students will receive the same credit toward graduation requirements regardless of which section they enroll in.

ON THE WAITLIST? I will do my best to ensure that any graduate student who wants to be in the class can enroll. Show up the first day of class and I will probably be able to get you in.

Registration notes specific to Computer Science (CS) students:

- Masters students: Want Minor Coursework credit? Email gradoffice@cs.utexas.edu to obtain prior approval.

- Masters students: Want Major Coursework credit? Email gradoffice@cs.utexas.edu if enrolled in the INF listing (to count it as a CS395T).

- PhD students may count this class toward the Depth requirement.

Instructor: Matt Lease (see "About the instructor" at bottom • publication list)

Day and Time: Mondays 12-2:45pm

Location: UTA 1.212

Unique IDs: 28309 (INF) and 51709 (CS)

Past offerings: Fall 2020 • Fall 2017 • Fall 2015 (pages for 2015 and earlier are now broken) • Spring 2014 • Spring 2012 • Spring 2011

Textbook: none required, all readings are available online

Course summary. This is a graduate research seminar. We will review the state of the art in human computation and crowdsourcing by reading and discussing research articles. Students will also complete assignments and conduct an original final project. The course culminates in final presentations and term papers on these projects.

Intended audience. As a graduate research seminar, the class is primarily intended for students who want to learn about state-of-the-art research in the field, either to conduct original research in it or to apply this understanding in related fields or professional work. The class also serves curious students who want to better understand how this field and related technologies are disrupting traditional work and data-processing practices.

Prerequisites - See Syllabus above.

About human computation and crowdsourcing. Rapid advances in artificial intelligence (AI) and machine learning (ML) have produced AI systems that are more powerful and ubiquitous than ever before. However, AI systems almost always rely on people in one or more ways. First, people often provide the labels (or annotations) needed to train AI systems. Second, because AI capabilities remain limited and imperfect, we compensate for these limitations by using people to augment AI systems. Such human-in-the-loop (HITL) systems combine people and machines to realize a whole greater than the sum of its parts. The people involved may be (1) end-users who partner with the AI system to make a decision or accomplish a task, or (2) on-demand internet workers who work behind the scenes, called upon by the AI to complete processing tasks it cannot complete on its own.

In general, human computation is the use of people rather than machines to perform certain computations for which human competency continues to exceed that of state-of-the-art algorithms (e.g., AI-hard tasks such as interpreting text or images). Just as cloud computing now enables us to harness vast Internet computing resources on demand, crowd computing lets us call upon the online crowd to perform human computation tasks on demand. Because crowd computing expands the traditional accuracy-time-cost tradeoffs of purely automated approaches, the potential to achieve these enhanced capabilities has begun to change how we design and implement intelligent systems.

While early work in crowd computing focused principally on data labeling to train automated systems, we are increasingly seeing a new form of hybrid, socio-computational system emerge, one that harnesses the collective intelligence of the crowd in combination with automated AI at run-time to better tackle difficult processing tasks. As such, we find ourselves today in an exciting new design space, where the potential capabilities of tomorrow's computing systems seem limited only by our imagination and creativity in designing algorithms that compute with crowds as well as silicon.

Examples of human computation systems: DuoLingo · EyeWire · FoldIt · GalaxyZoo · MonoTrans · Legion:Scribe · Mechanical Turk · PlateMate · ReCaptcha · Soylent · Ushahidi · VizWiz

Introductions to Human Computation and Crowdsourcing:

- Alexander Quinn and Benjamin B. Bederson. Human computation: a survey and taxonomy of a growing field. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, 2011.

- Aniket Kittur, Jeffrey V. Nickerson, Michael Bernstein, Elizabeth Gerber, Aaron Shaw, John Zimmerman, Matthew Lease, and John Horton. The Future of Crowd Work. In Proceedings of the 2013 conference on Computer supported cooperative work, pp. 1301-1318. ACM, 2013.

- Edith Law and Luis von Ahn. Human computation. Synthesis Lectures on Artificial Intelligence and Machine Learning 5, no. 3 (2011): 1-121.

- Matthew Lease and Omar Alonso. Crowdsourcing and Human Computation, Introduction. Encyclopedia of Social Network Analysis and Mining (ESNAM), pages 304-315, September 2014.

- See also: AAAI Crowdsourcing and Human Computation (HCOMP) conference proceedings

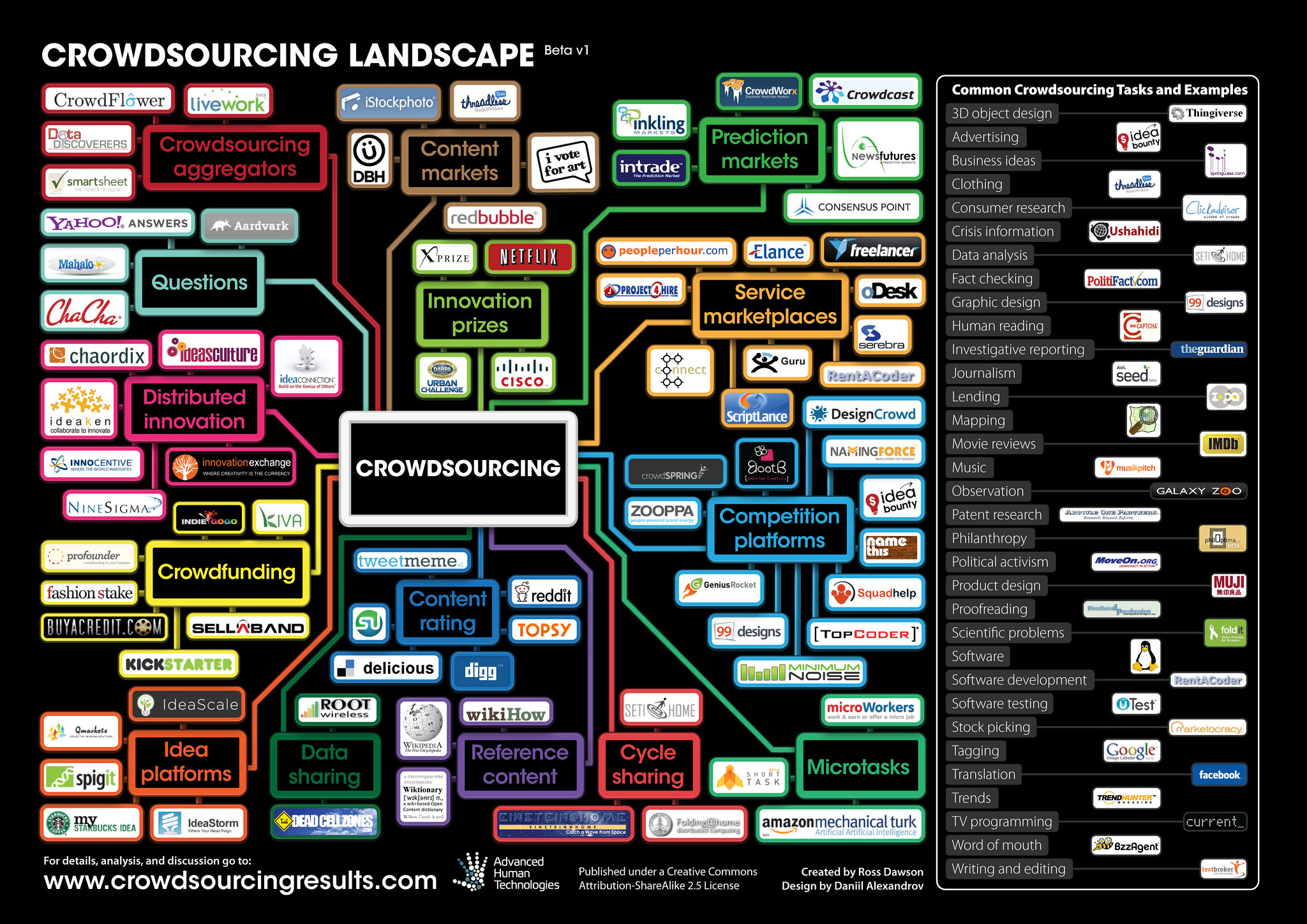

Advances in research have also translated into a thriving private sector, with many existing startups and opportunities for more.

Want to publish original research?

In previous offerings of the course, several of the best, most innovative course projects have been extended beyond the semester until the work was in publishable form. If you have a great idea and are willing to work hard to get it published, the course project provides a great opportunity to refine the idea and start developing the project with regular feedback and advising from the instructor. Examples of past course projects that were subsequently published include (see publications for links):

- Brandon Dang, Martin J. Riedl, and Matthew Lease. But Who Protects the Moderators? The Case of Crowdsourced Image Moderation. In 6th AAAI Conference on Human Computation and Crowdsourcing (HCOMP): Works-in-Progress Track, 2018. 5 pages, peer-reviewed, non-archival. Demo URL updated since publication. [ bib | pdf | demo | blog-post | sourcecode | conference-website | slides ]

- Akash Mankar, Riddhi J. Shah, and Matthew Lease. Design Activism for Minimum Wage Crowd Work. In 5th AAAI Conference on Human Computation and Crowdsourcing (HCOMP), 2017. See extended technical report: arXiv 1706.10097. [ bib | pdf | tech-report ]

- Yalin Sun, Pengxiang Cheng, Shengwei Wang, Hao Lyu, Matthew Lease, Iain Marshall, and Byron C. Wallace. Crowdsourcing Information Extraction for Biomedical Systematic Reviews. In 4th AAAI Conference on Human Computation and Crowdsourcing (HCOMP): Works-in-Progress Track, 2016. 3 pages. arXiv:1609.01017. [ bib | pdf ]

- Xiaoyu Zeng and Ruohan Zhang. Participatory Art Museum: Collecting and Modeling Crowd Opinions. AAAI 2017, pages 5017-5018. [pdf]

- James Cheng, Monisha Manoharan, Matthew Lease, and Yan Zhang. Is there a Doctor in the Crowd? Diagnosis Needed! (for less than $5). In Proceedings of the iConference, 2015.

- Yinglong Zhang, Jin Zhang, Matthew Lease, and Jacek Gwizdka. Multidimensional Relevance Modeling via Psychometrics and Crowdsourcing. In Proceedings of the 37th international ACM SIGIR conference on Research and Development in Information Retrieval, pages 435-444, 2014.

- Di Liu, Randolph Bias, Matthew Lease, and Rebecca Kuipers. Crowdsourcing for Usability Testing. In Proceedings of the 75th Annual Meeting of the American Society for Information Science and Technology (ASIS&T), October 28-31, 2012.

- Stephen Wolfson and Matthew Lease. Look Before You Leap: Legal Pitfalls of Crowdsourcing. In Proceedings of the 74th Annual Meeting of the American Society for Information Science and Technology (ASIS&T), 2011.

- Adriana Kovashka and Matthew Lease. Human and Machine Detection of Stylistic Similarity in Art. In Proceedings of the 1st Annual Conference on the Future of Distributed Work (CrowdConf), San Francisco, September 2010.

How to post your course paper online as a technical report? See an example from a previous semester.

- arXiv (Create new submission)

- Social Science Research Network (SSRN)

- UT CS Technical Reports (Create new submission)

Looking for a funded Research Assistant (RA) position? I typically do not offer RA positions until a student has taken a course with me and demonstrated their abilities and drive to succeed. While RA positions depend on available funding, I am often looking for new RAs to help me advance the state of the art in research.

About the instructor. Associate Professor Matthew Lease directs the Information Retrieval and Crowdsourcing Lab in the School of Information at the University of Texas at Austin. He received his Ph.D. and M.Sc. degrees in Computer Science from Brown University, and his B.Sc. in Computer Science from the University of Washington. His research on crowdsourcing / human computation and information retrieval has been recognized with early career awards from NSF, IMLS, and others. Lease and co-authors received the Best Paper Award at the 2016 AAAI HCOMP for effective use of crowdsourcing to collect high-quality search relevance judgments. Lease has presented crowdsourcing tutorials at ACM SIGIR, ACM WSDM, CrowdConf, and SIAM Data Mining (talk slides available online). From 2011 to 2013, he co-organized the Crowdsourcing Track for the U.S. National Institute of Standards & Technology (NIST) Text REtrieval Conference (TREC). In 2012, Lease spent the summer working on industrial-scale crowdsourcing at CrowdFlower.